At the end of October, the Barcelona Biomedical Research Park (PRBB) hosted two significant events on the intersection of Artificial Intelligence (AI), ethics and good scientific practice. They brought together leading experts, policymakers and researchers to examine critically how AI is shaping the future of scientific research and which ethical guidelines should govern its use.

The two events, each with its own focus, aimed to address the ethical challenges of AI in research, provide insights into current EU regulations, and reflect on a responsible approach to integrating AI tools into scientific work.

In addition, the first episode of the PRBB's new podcast series, “Science with a view”, also focused on AI in research and on how the community uses these tools.

“Artificial Intelligence and Ethics, the big wave”

The first of these events, held on October 30th, was the ERION-EARMA annual meeting, co-organised with the Barcelona Institute for Global Health (ISGlobal) and the PRBB Good Scientific Practice group. It focused on the ethical and regulatory aspects of AI in research. With a strong emphasis on Europe’s regulatory landscape, the meeting brought together an impressive lineup of speakers from across Europe to discuss how to balance fostering AI advancements with safeguarding human rights and societal values.

The morning session, open to all, centred more on the development of AI tools. It kicked off with Dorian Karatzas, former Head of the Research Ethics and Integrity Sector at the European Commission's DG Research & Innovation, who offered valuable insights into what research managers can do to promote ethics and integrity in AI research. Next, Prof. Dirk Lanzerath from the University of Bonn explored the ethical governance of AI research, drawing on his experience in the iRECS project.

Mara de Sousa Freitas, from the Universidade Católica Portuguesa, then presented the work of the CoARA-ERIP group (Ethics and Research Integrity Policy in Responsible Research Assessment for Data and Artificial Intelligence).

Juliana Chaves-Chaporro, from UNESCO, explored the societal challenge of balancing AI advancements with human rights, particularly for marginalized groups and regions. She presented UNESCO’s first-ever global standard on AI ethics, the ‘Recommendation on the Ethics of Artificial Intelligence’, adopted in 2021. Among other things, it proposes an ethical impact assessment for AI development teams, with questions such as: Who is most likely to be adversely affected by this AI system? What form will these impacts take? What can be done to prevent these harms, and have resources been allocated to preventing them?

After a coffee break, Lisa Häberlein, from the European Network of Research Ethics Committees (EUREC), delved into the ethical and regulatory challenges that AI poses for digital healthcare in developing regions such as Sub-Saharan Africa. She presented the STRATEGIC project, which aims to build capacity and raise awareness by developing training materials tailored to ethics and regulatory evaluations in that context. These focus on patient privacy and data security, informed consent (especially across diverse languages), and the mitigation of biases to ensure equitable healthcare delivery.

Kaisar Kushibar followed, presenting the FUTURE-AI guidelines for trustworthy and deployable artificial intelligence in healthcare, developed by an international community of data scientists, clinicians, ethicists, social scientists and legal experts. He showed how, despite the promise of AI in healthcare, the great majority of doctors are not using it, owing to concerns about the (lack of) robustness, fairness, security and transparency of the tools. The guidelines therefore set out how medical AI tools should be designed, developed and validated to be Fair, Universal, Traceable, Usable, Robust and Explainable. Through consensus, 30 best practices were defined, covering the technical, clinical, socio-ethical and legal dimensions of trustworthy AI. Engaging all stakeholders is essential, Kushibar said, to remove preconceptions and avoid surprises later on.

Several speakers highlighted the need to engage all stakeholders and to work with researchers from different disciplines in order to identify the sources of bias and ethical risk in each context.

Shereen Cox, from the Centre for Medical Ethics at the University of Oslo, Norway, spoke next on extended reality, further broadening the conversation about the global impact of AI and taking the ethical issues into a whole other dimension, or a whole other reality.

Sex and gender bias in Artificial Intelligence for health was discussed by Atia Cortés, from the Barcelona Supercomputing Center (BSC) and a member of the Bioinfo4Women initiative. She emphasized, on the one hand, the importance of understanding medically relevant sex and gender differences and, on the other, the need to properly identify, evaluate and mitigate biases, starting from the moment of data collection, in order to ensure trustworthy AI.

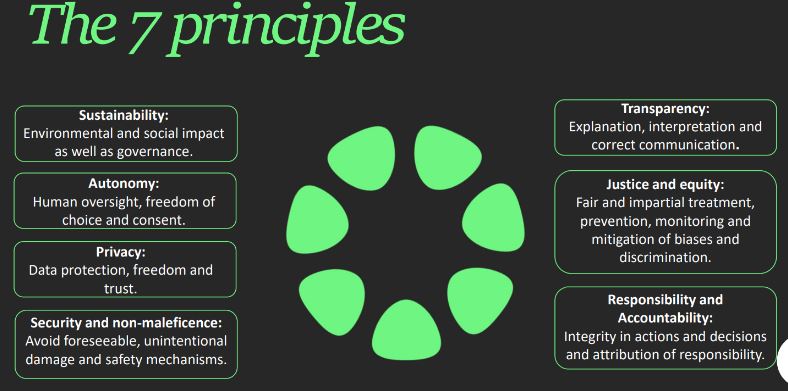

Finally, Alexandra Lillo, from the Artificial Intelligence Ethics Observatory of Catalonia (OEIAC), offered a legal view on AI use. She presented the observatory's self-assessment tool, the “PIO Model”, which she said is useful for both developers and users. Built around 7 principles and more than 130 questions, and grounded in the applicable regulations and in ethical and legal recommendations, the PIO Model aims to help users determine whether an AI system complies with legal and ethical principles and what its level of risk (likelihood of harm) is.

After the morning session, the meeting continued with a networking lunch and an afternoon workshop for ERION members only, where participants further discussed how AI and ethics can be meaningfully integrated into the research community.

AI in Scientific Writing

The second event, held the following day, was a course designed to discuss critically the role of AI in scientific writing. The workshop aimed to help researchers understand the guidelines and rules on using AI tools to write research projects, manuscripts, literature reviews or grant applications. Led by Thiago Carvalho and David del Álamo Rodríguez from Fellowsherpa, the course explored the concept of authorship and the accountability that comes with it, and therefore why a generative AI tool such as ChatGPT cannot be considered an author of a paper.

Participants discussed the main advantages and uses of generative AI in research, such as writing, translating, generating images, reviewing the literature or brainstorming. They then drew up their own best practices for AI use, such as never feeding confidential data to an AI tool and always checking the results and references it provides, and compared them with existing guidelines from journals and funding agencies. The main message was that the ultimate responsibility lies with the researcher, whichever tools they use. Participants were therefore encouraged to think critically about the risks of over-reliance on AI and about the importance of maintaining scholarly integrity.

“AI is, at the end of the day, a tool. So it is down to researchers to use it responsibly and avoid over-relying on it.”

Walking together towards a responsible use of AI in research

Both events underscored the increasing importance of AI in scientific research and the need to integrate ethical guidelines that ensure these tools are used responsibly.

AI has the potential to drive significant breakthroughs in research, but it also raises ethical dilemmas, from bias and fairness to privacy. At the PRBB, we are committed to fostering a space where these conversations can take place and where all stakeholders, from researchers to policymakers, are involved. In this way we can collectively move forward with a balanced and responsible approach, ensuring that these transformative tools benefit society in ways that are both effective and ethical.